To improve your speed to value, improve your speed to learning

When was the last time you saw something that genuinely inspired you? The answer to that question is a good indication of how much you’re investing in experimentation—and it provides a surprisingly accurate view of how quickly your team will be able to deliver value.

I found myself in awe a few weeks ago when I stayed at Alexander Graham Bell’s house in Nova Scotia. He created this space for innovation and experimentation, and it’s where he carried out his research into tetrahedral kites, work that would eventually lead to the first powered flight in the British Empire.

Here is one of the paper prototypes.

© Martina Hodges-Schell

This simple prototype eventually led to this.

© https://en.wikipedia.org/wiki/AEA_Silver_Dart

Looking through these photos, you can see a trajectory from the small experiments to the large, fully functioning prototype.

© https://publicdomainreview.org/collection/alexander-graham-bell-s-tetrahedral-kites-1903-9/

Bringing the inventor’s mindset into the modern age

These images tell a story that we can apply to our work today.

Are you feeling pressure to harness AI to improve productivity or innovate your products and services? For the overwhelming majority of business leaders, the answer is yes.

But we’re often getting lost in the technology and forgetting the hard-earned lessons of the great inventors. This is a big part of why 95% of AI investments have failed to deliver their expected return.

Here's the pattern I'm seeing: Teams are using AI to shortcut the learning process itself. They're asking AI to generate customer personas, validate assumptions, even simulate user feedback. But this is precisely backwards. AI can accelerate many things, but it cannot replace first-hand observation of real humans struggling with real problems.

Alexander Graham Bell didn't theorise about flight. He built paper prototypes, flew kites in the wind, watched what worked and what failed, adjusted, and tried again. The learning came from the doing, from witnessing reality push back against his assumptions.

The same principle holds today. Taking a lean approach to experimentation isn't about avoiding AI—it's about using AI appropriately while protecting the learning that only comes from direct contact with your customers and their problems.

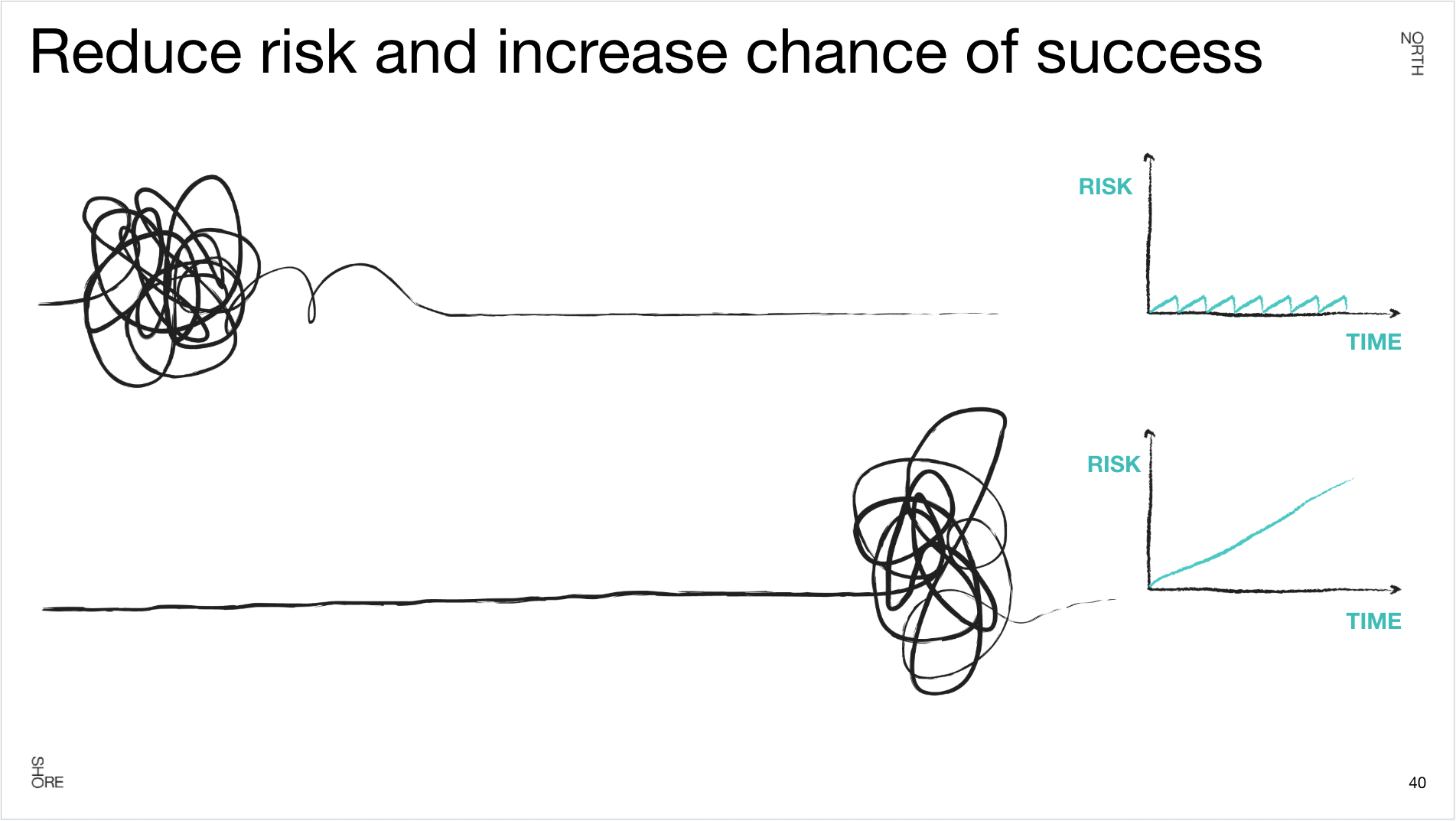

Consider the risk involved in developing new products and services. If you go all the way through the development process and wait until your product is released before you try to mitigate that risk, it keeps growing exponentially the whole time.

In contrast, if you create small experiments to test assumptions, you can remove uncertainty and reduce risk on an ongoing basis.

To put it another way: Speed to value is intrinsically linked to speed to learning. The more you invest in learning and experimentation, the better you’re able to manage risk, waste, and uncertainty.

How do you optimise for learning?

The build-measure-learn loop gives you a systematic way to reduce risk: Define your hypothesis, run the smallest viable experiment to test it, then make decisions based on evidence rather than assumptions.

The key is identifying what you need to learn at each stage—from problem definition through solution design to scaling—and designing the fastest, cheapest experiment that removes enough uncertainty to move forward confidently.

Each loop tackles a different question through direct observation and testing—not by asking AI what it thinks customers might do.

Are we solving a real problem? Will this solution actually work? What's the minimum viable version? Can we build a sustainable business model around this? Most teams skip straight to building and hope they've guessed right. But the lean approach acknowledges uncertainty upfront and systematically reduces it.

Here’s how this plays out in practice: Imagine you’re trying to improve the performance of your customer support team. You see that they’re being overwhelmed by repetitive questions and their response times are lagging.

To define your problem, you might come up with a few assumptions, such as:

Customer support spends too much time answering the same questions.

Personalised answers are not important for common questions.

Customers prefer quick, automated answers to slow, personalised ones.

You might then propose a solution hypothesis: using an AI assistant to handle the top 20 most common support questions.

Some of the riskiest assumptions for this solution might include:

Customers will actually use a chatbot rather than insisting on human support

The AI can understand the nuance of our domain-specific questions accurately enough to provide useful answers

Redirecting to human support when needed won't create a worse experience than the current state

Our support team will trust and adopt this tool rather than see it as a threat to their jobs

Next, design an experiment before you decide to build anything. For example, you could run a "Wizard of Oz" test by following these steps:

Add a chat interface to the support page.

Have actual support team members respond through it (pretending to be AI).

Track: What questions come through? Do customers engage? When do they ask for humans? What accuracy rate satisfies customers?

Run for 2 weeks with 10% of support traffic.
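The last step, routing 10% of support traffic to the test, is easy to get subtly wrong: if assignment is random on every page view, the same customer could see the chat one day and not the next, which muddies what you learn. A minimal Python sketch, assuming each visitor carries a stable customer or session ID; the `in_experiment` function and the `woz-support-chat` experiment name are hypothetical and not part of any particular tool. It uses deterministic hash-based bucketing, a common technique for splitting traffic in experiments.

```python
import hashlib


def in_experiment(customer_id: str,
                  experiment: str = "woz-support-chat",
                  share: float = 0.10) -> bool:
    """Decide whether a customer is routed to the Wizard of Oz chat.

    Hashing the experiment name together with the customer ID gives a stable,
    roughly uniform assignment: the same customer always lands in the same
    group, and different experiments get independent splits.
    """
    digest = hashlib.sha256(f"{experiment}:{customer_id}".encode("utf-8")).digest()
    # Keep the top 53 bits so the division below is exact and lands in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < share


if __name__ == "__main__":
    ids = [f"customer-{i}" for i in range(10_000)]
    exposed = sum(in_experiment(cid) for cid in ids)
    # Expect a figure close to 10%, not exactly 10%.
    print(f"{exposed} of {len(ids)} customers see the chat ({exposed / len(ids):.1%})")
```

Logging the assignment alongside each conversation lets you answer the tracking questions above separately for customers who did and did not see the chat.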

Before you start the test, make sure you define your success criteria. This might be something like:

At least 60% of customers engage with the chat

At least 70% of queries can be resolved without human escalation

Customer satisfaction scores remain at or above current levels
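Writing the criteria down as a check you agree on before the test starts makes it harder to move the goalposts afterwards. A minimal Python sketch, assuming you can export aggregate counts from the two-week test; the `ExperimentResults` fields and the `evaluate` function are hypothetical names, and "engagement" is assumed to mean customers who sent at least one chat message out of those offered the chat.

```python
from dataclasses import dataclass


@dataclass
class ExperimentResults:
    """Aggregate counts exported from the Wizard of Oz test (hypothetical schema)."""
    customers_offered_chat: int
    customers_who_engaged: int       # sent at least one chat message
    chat_queries: int
    queries_escalated_to_human: int  # customer asked for, or was handed to, a person
    baseline_csat: float             # satisfaction score before the test
    experiment_csat: float           # satisfaction score for chat users during the test


def evaluate(r: ExperimentResults) -> dict[str, bool]:
    """Check each success criterion that was agreed before the test started."""
    if r.customers_offered_chat == 0 or r.chat_queries == 0:
        raise ValueError("no data collected yet")
    engagement = r.customers_who_engaged / r.customers_offered_chat
    resolved_without_human = 1 - r.queries_escalated_to_human / r.chat_queries
    return {
        "at least 60% engage with the chat": engagement >= 0.60,
        "at least 70% resolved without escalation": resolved_without_human >= 0.70,
        "CSAT at or above current level": r.experiment_csat >= r.baseline_csat,
    }


if __name__ == "__main__":
    # Invented numbers, for illustration only.
    results = ExperimentResults(
        customers_offered_chat=1_000,
        customers_who_engaged=640,
        chat_queries=800,
        queries_escalated_to_human=200,
        baseline_csat=4.3,
        experiment_csat=4.2,
    )
    checks = evaluate(results)
    for criterion, passed in checks.items():
        print(f"{'PASS' if passed else 'FAIL'}  {criterion}")
    print("All criteria met" if all(checks.values()) else "Not all criteria met")
```

With these invented numbers, engagement (64%) and resolution without escalation (75%) pass, but satisfaction dips below the baseline, so the result does not meet the bar you set in advance.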

This experiment will help you to learn:

Whether customers actually want this (problem validation)

Which questions are truly answerable vs. need human judgment (solution refinement)

What accuracy threshold is "good enough" (MVP definition)

How customers respond to this tool (business model/adoption risk)

Based on the experiment, you might discover that the real problem isn't routine questions at all; it's that your documentation is hard to find. The MVP then becomes better search and knowledge management, with AI as a supporting tool rather than the primary solution. By learning first, you could save six months of development and $500K or more.

A closer look at assumptions and how to test your riskiest ones

Whenever we’re developing a new product or solution, we’re making assumptions about our customers and their behaviour. Going through the build-measure-learn loop gives us the opportunity to test our assumptions.

But we don’t simply list out all our assumptions and test all of them—we’d never get anything done this way! Our goal is to identify the riskiest assumptions so we can mitigate some of that risk through our experiments.

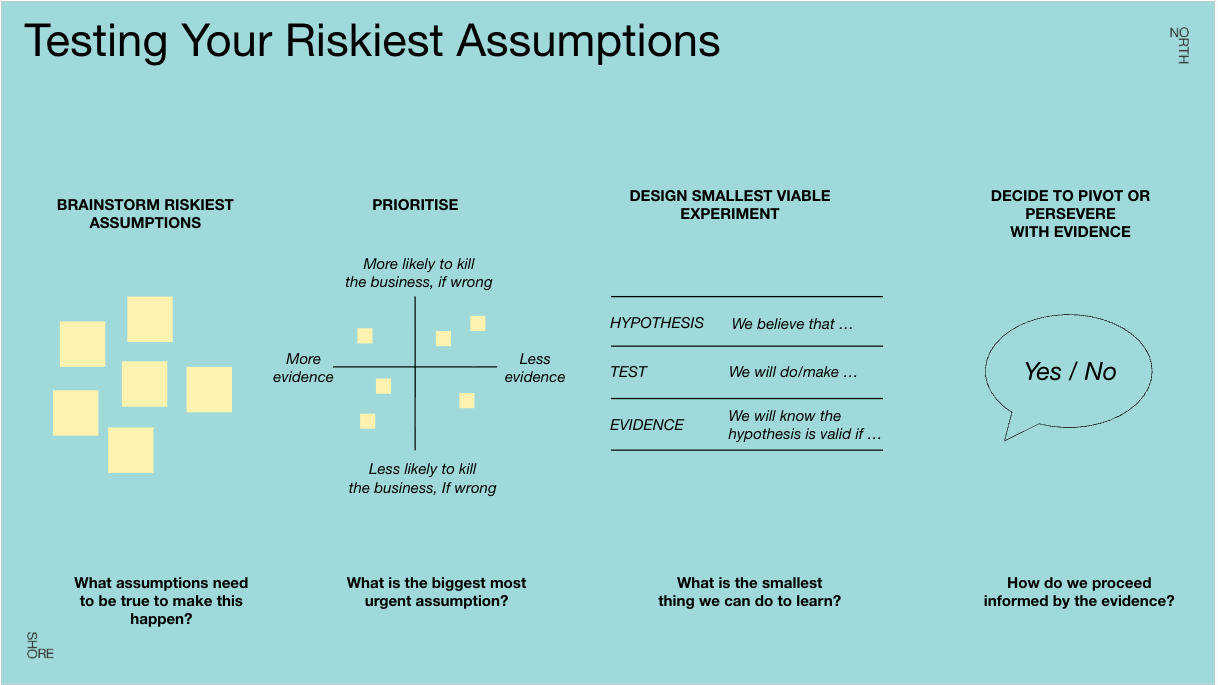

Here’s a simple process to help you identify and test your riskiest assumptions:

Start by brainstorming all the assumptions that need to be true in order for your product to work.

Next, prioritise. It can help to place your assumptions on a 2x2 grid: one axis runs from more evidence to less evidence (how much of an assumption this really is), and the other represents the likelihood that it kills your business if it’s wrong (the degree of risk). Focus your attention on the assumptions that carry the greatest risk and have the least evidence behind them.

Once you’ve narrowed in on a few of the riskiest assumptions, you can start to design the smallest viable experiments. This template can help: We believe that [hypothesis]. We will do/make [test]. We will know if the hypothesis is valid if [evidence].

Finally, use what you learn from these small tests to decide, based on evidence, whether to pivot or persevere.
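The prioritisation and template steps above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: each assumption gets two scores from 0.0 to 1.0 (how damaging it would be if wrong, and how strong the existing evidence is), 0.5 is treated as the midline of the 2x2 grid, and the quadrant labels other than "test first" are hypothetical names rather than part of the process described here. The scores in the example are invented.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    statement: str
    risk: float      # 0.0 = harmless if wrong, 1.0 = kills the business if wrong
    evidence: float  # 0.0 = pure guess, 1.0 = well proven

    @property
    def quadrant(self) -> str:
        """Place the assumption on the 2x2 grid, using 0.5 as the midline on both axes."""
        risky = self.risk >= 0.5
        unproven = self.evidence < 0.5
        if risky and unproven:
            return "test first"
        if risky:
            return "monitor"
        if unproven:
            return "test later"
        return "safe to proceed"


def riskiest(assumptions: list[Assumption], limit: int = 2) -> list[Assumption]:
    """Return up to `limit` 'test first' assumptions, most risky and least proven first."""
    candidates = [a for a in assumptions if a.quadrant == "test first"]
    return sorted(candidates, key=lambda a: (-a.risk, a.evidence))[:limit]


def hypothesis_card(hypothesis: str, test: str, evidence: str) -> str:
    """Fill in the hypothesis template from the step above."""
    return (f"We believe that {hypothesis}. We will {test}. "
            f"We will know if the hypothesis is valid if {evidence}.")


if __name__ == "__main__":
    # Assumptions from the support-chatbot example; the scores are invented.
    backlog = [
        Assumption("customers will use a chatbot rather than insist on a human", 0.9, 0.2),
        Assumption("the AI can answer our domain-specific questions accurately", 0.8, 0.3),
        Assumption("hand-offs to human support won't worsen the experience", 0.6, 0.4),
        Assumption("our support team will trust and adopt the tool", 0.7, 0.6),
    ]
    for a in backlog:
        print(f"{a.quadrant:>15}: {a.statement}")
    top = riskiest(backlog)[0]
    print(hypothesis_card(
        hypothesis=top.statement,
        test="run a two-week Wizard of Oz chat test with 10% of support traffic",
        evidence="at least 60% of customers offered the chat engage with it",
    ))
```

In practice the scores come from a team discussion around the grid; the code only makes the ordering explicit so the riskiest, least-proven assumptions get tested first.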

Remember: The value of experimentation is in first-hand learning

You now have a simple framework for identifying and testing assumptions, and the foundation for integrating experimentation more deeply into your team’s work. Make sure you resist the temptation to outsource that learning to AI.

Bell's paper kites taught him about flight because he flew them in real-life conditions and watched them fail. Your experiments will teach you about your customers because you'll watch real people use (or not use) the solutions you’ve designed. There are no shortcuts to this kind of understanding.

I know it’s tempting to bypass the time and energy it takes to find and observe individuals and synthesise all the learning. This is why I’m always being asked for silver-bullet solutions to skip over that effort.

But this point is so important it’s worth repeating: the value of discovery lies in experiencing that learning first-hand. If Alexander Graham Bell had started with the full-powered plane and skipped all the steps that came before it, his ideas might never have gotten off the ground. Literally.

If you’re looking for help building a culture of experimentation in your organisation, Northshore Studio can support you in every stage of experimentation, from defining your problem and solution all the way through your MVP and business model. Get in touch to discuss how we might work together!